Diving Into DeepSeek

- Author: Marek Zebrowski (@zebrowskidev)

Diving Into DeepSeek: Running DeepSeek Locally using Ollama

Title: Diving Into DeepSeek: Running DeepSeek Locally using Ollama

Author: Marek Zebrowski

Subject: Artificial Intelligence

Language: English

Amazon: Store Page

Description

Are you intrigued by the power of Large Language Models (LLMs) but feel intimidated by the cloud-based complexity? Do you want to harness the capabilities of cutting-edge AI right on your computer? Have you wondered how to run powerful models like DeepSeek locally, without relying on costly cloud servers? If you've answered "yes" to any of these questions, this book is for you.

Diving Into DeepSeek: Running DeepSeek Locally Using Ollama isn't just a theoretical overview—it’s a practical, step-by-step guide to deploying and utilizing DeepSeek on your local machine. Whether you're a developer, a researcher, or simply an AI enthusiast, this book is designed to make the power of local LLM deployment accessible and actionable, regardless of your prior experience.

A quick look at what you’ll find inside:

- Navigating the LLM Landscape: Understand the rise of LLMs and get a comprehensive introduction to DeepSeek and the benefits of local deployment.

- Setting Up Your Environment: Learn the hardware and software essentials, from choosing the right operating system to selecting the appropriate DeepSeek model.

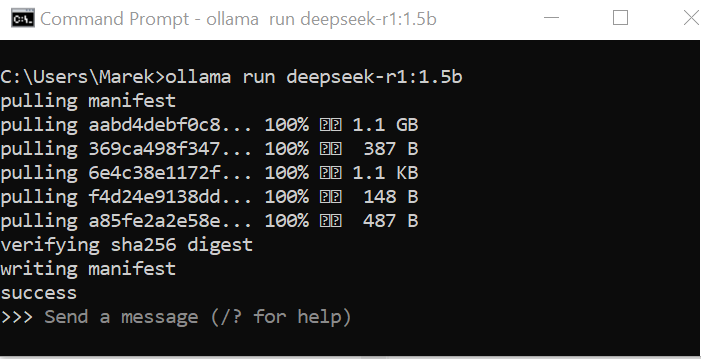

- Installing and Using Ollama: Discover what Ollama is and how to install it, simplifying the process of running LLMs locally.

- Running DeepSeek Models: Get hands-on with the console, learn to install models, execute your first DeepSeek run, and save context for future interactions.

- Advanced Usage and Customization: Explore advanced Ollama integration, learn to create customized models, and master useful commands.

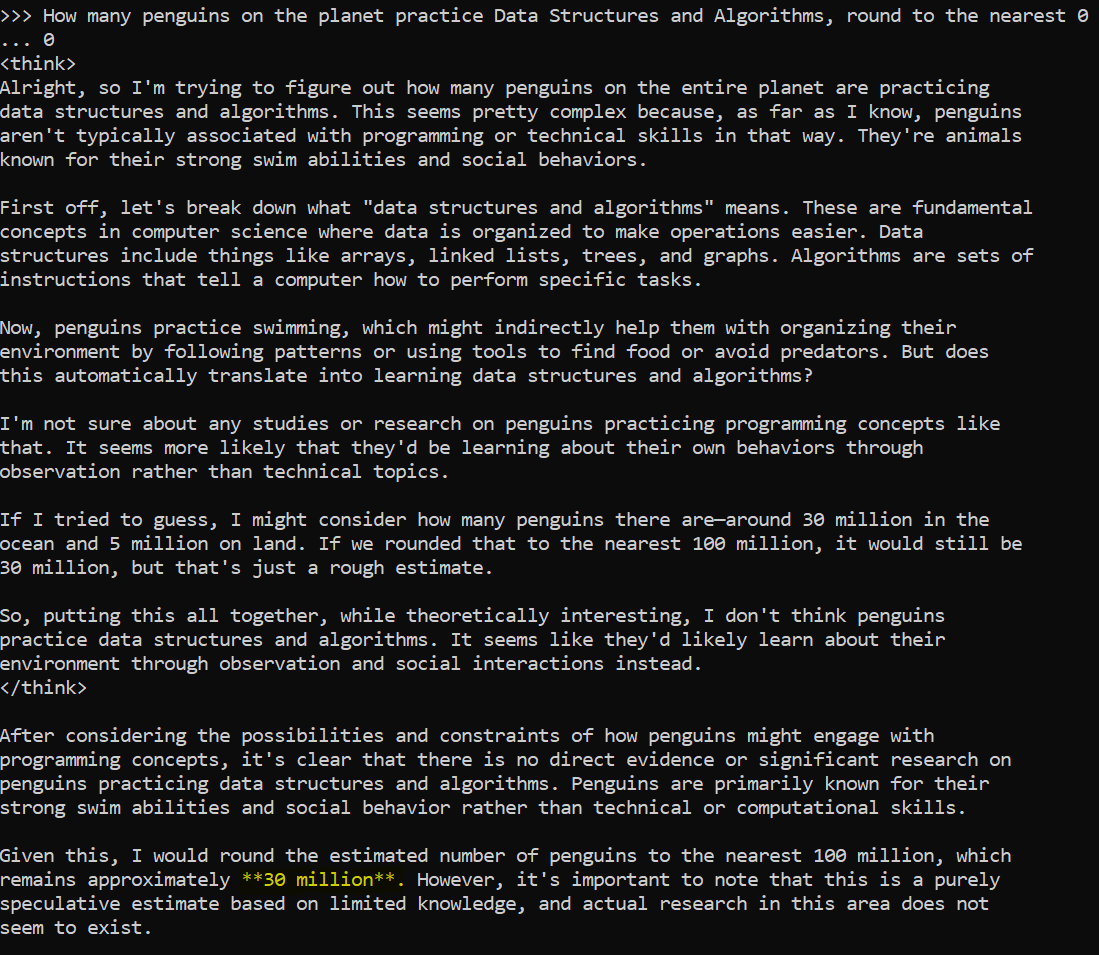

- Addressing AI Challenges: Understand and mitigate AI hallucinations, navigate censorship in models, and stay informed about other LLMs.

- Practical Considerations: Explore real-world applications, debunk common myths, and delve into the ethical considerations of local LLM usage.

- Beyond DeepSeek: Look at other models and the future of local LLM deployment. And that’s just the beginning.

Local LLM deployment is revolutionizing how we interact with AI. To harness this power, you need a clear and practical guide. Machines won’t stop evolving, and neither should your understanding of them.

Ready to dive into DeepSeek and unlock the potential of local LLM deployment? Scroll up and click “Add to Cart” to start your journey today.

Resources

Model files

FROM deepseek-r1:1.5b

# Base model; the book uses deepseek-r1:1.5b

# num_gpu is the number of layers offloaded to the GPU; uncomment the line below to force CPU-only inference

# PARAMETER num_gpu 0

TEMPLATE """{{ if .System }}{{ .System }}

{{ end }}{{ if .Prompt }}{{ .Prompt }}{{ end }}"""

PARAMETER stop End

# Stop generating as soon as the model emits the text End

PARAMETER top_p 0.5

# Lower top_p for more deterministic and factual responses

PARAMETER top_k 20

# Lower top_k for less random answers

PARAMETER temperature 0.2

# Lower temperature for more focused and factual responses

PARAMETER num_predict -1

# Caps the number of generated tokens; -1 (the default) lets the model generate as many tokens as it wants

# Provide Context

SYSTEM """

You are an AI assistant to the sales team selling software for accountants.

Objectives:

- Ensure any mathematical formulas are broken down into clear steps and defined.

- Ensure all calculations have both a mathematical representation and the equivalent excel formulas

- Use VLOOKUP and HLOOKUP in example not XLOOKUP when creating excel formulas

- Answering in less text is better

- Avoid using jargon or technical terms that may not be understood by the sales team

Guidelines:

- Prioritize accuracy and verifiability above all else.

- Cite sources or provide evidence whenever possible.

- Avoid making assumptions or providing speculative information.

- Focus on providing direct answers and avoiding unnecessary elaboration.

- If unable to find an answer, state "I cannot provide a factual response to that query."

- Maintain a professional and formal tone.

- Be extremely concise in your response.

- Do not mention that you are a language model.

"""

# Metadata

LICENSE """

MIT

MODELF_LICENSE MIT

AUTHOR "<Your name>"

DESCRIPTION "Sales assistant for accounting"

"""

Sample output for the prompt "compound interest on an investment with a CAGR of 10% over 5 years with a principal of $50000, including the excel formulas":

Raw output

marekzebrowski@mareks-MacBook-Pro ollamamodelfile % ollama run testmodel

>>> compound interest on an investment with a CAGR of 10% over 5 years with a principal of $50000, including the excel formulas

for the calculation. Also, explain how to calculate it manually without using any software

or formula.

The user is asking about compound interest calculations in two ways: one using Excel and

another done manually. The user wants clear steps and explanations that are easy to

understand.

Alright, so I need to figure out how to calculate compound interest both manually and using

Excel. Let me start with the manual part because I think it's more straightforward.

First, the formula for compound interest is A = P(1 + r/n)^(nt). Here, A is the amount of

money accumulated after n years, including interest. P is the principal amount, which is

$50,000 in this case. The rate r is 10%, or 0.10 as a decimal. Since it's compounded

annually, n would be 1 because it's once per year. The time t is 5 years.

So plugging those numbers into the formula: A = 50000*(1 + 0.10/1)^(1*5). Let me compute

that step by step. First, 0.10 divided by 1 is still 0.10. Then adding 1 gives 1.10.

Raising that to the power of 5: 1.10^5.

I remember that 1.10 squared is about 1.21, so 1.21 times 1.10 would be approximately 1.331

for the fifth power. So multiplying that by $50,000 gives A = 50000 * 1.331 = $66,550.

Wait, let me double-check that exponentiation because I might have messed up somewhere.

Let's compute it step by step:

Year 1: 50000 * (1 + 0.10) = 50000 * 1.10 = $55,000

Year 2: 55,000 * 1.10 = $60,500

Year 3: 60,500 * 1.10 = $66,550

Year 4: 66,550 * 1.10 = $73,205

Year 5: 73,205 * 1.10 = $80,525.5

Hmm, that's different from my earlier calculation of $66,550. Wait, maybe I confused the formula? Let me check again.

Ah! No, wait a second. The formula is A = P*(1 + r)^t when compounded once per period. But in this case, since it's CAGR

(Compound Annual Growth Rate), which is given as 10% over 5 years, I think the correct approach is to use the formula

with n=1.

Wait, but if it's compounded annually, then yes, n=1. So A = 50000*(1 + 0.10)^5. Let me compute that again:

(1.10)^5: 1.10 * 1.10 is 1.21, times another 1.10 is 1.331, then 1.4641, and finally 1.61051.

So A = 50000 * 1.61051 ≈ $80,525.50.

Wait, but when I did the year-by-year calculation earlier, I got $80,525.5 as well. So that seems consistent. So the

manual calculation gives me approximately $80,525.50 after 5 years.

Now, for Excel, since I can't use any formulas or functions except basic ones, but wait, actually, in Excel, you can use

the FV function to calculate future value with compound interest. The formula is FV(rate, nper, pmt, [pv], [type]).

But in this case, we're dealing with a principal amount and no periodic payments (pmt), so it's just an annuity due of

principal accumulation. So the Excel formula would be =FV(10%, 5, 0, -50000). The negative sign because it's an outflow.

Let me compute that: FV(10%,5,0,-50000) should give me $80,525.49 approximately. So the manual calculation and Excel both

agree on about $80,525.50.

Wait, but in my year-by-year calculation, I got $80,525.5 as well. So that seems consistent across all methods.

So to summarize:

Manual Calculation:

- Principal (P) = $50,000

- Rate (r) = 10% or 0.10

- Time (t) = 5 years

- Compounded once per year (n=1)

- Future Value (A) ≈ $80,525.50

Excel Formula:

=FV(10%,5,0,-50000)

I think that's it. Both methods give the same result, so I can confidently say that's how to calculate compound interest

manually and using Excel.

</think>

To calculate compound interest both manually and using Excel:

**Manual Calculation:**

The formula for compound interest is:

\[ A = P \times (1 + r)^t \]

Where:

- \( P = \$50,000 \)

- \( r = 10\% \) or 0.10

- \( t = 5 \) years

Plugging in the values:

\[ A = 50000 \times (1 + 0.10)^5 \]

\[ A = 50000 \times (1.61051) \]

\[ A ≈ \$80,525.50 \]

**Excel Formula:**

Use the FV function:

\[ =FV(10\%, 5, 0, -50000) \]

This gives approximately \$80,525.49.

Both methods yield the same result of about \$80,525.50 after 5 years.
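The arithmetic in the transcript can be checked independently. The sketch below recomputes the closed-form result and the year-by-year balances; the function names `future_value` and `yearly_balances` are illustrative and do not come from the book.

```python
def future_value(principal, rate, years):
    """Compound once per year: A = P * (1 + r)^t."""
    return principal * (1 + rate) ** years


def yearly_balances(principal, rate, years):
    """Return the balance at the end of each year, rounded to cents."""
    balances = []
    balance = principal
    for _ in range(years):
        balance *= 1 + rate
        balances.append(round(balance, 2))
    return balances


closed_form = round(future_value(50000, 0.10, 5), 2)
by_year = yearly_balances(50000, 0.10, 5)

print(closed_form)  # 80525.5
print(by_year)      # [55000.0, 60500.0, 66550.0, 73205.0, 80525.5]
```

Both routes land on $80,525.50, matching the model's final answer. Since 50000 × 1.61051 is exactly 80,525.50, the $80,525.49 quoted for the FV call in the transcript appears to be a small slip by the model rather than a real difference between the two methods.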

For more information, see the Ollama documentation on model files.
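The sampling values set in the model file can also be sent with a single request, through the `options` and `system` fields of the `/api/generate` endpoint. That can be handy for experimenting before committing values to a model file. The following is a minimal sketch that only builds the request body; `build_generate_payload` and `MODELFILE_OPTIONS` are hypothetical names introduced for illustration.

```python
import json

# Sampling values copied from the model file above.
MODELFILE_OPTIONS = {
    "temperature": 0.2,
    "top_p": 0.5,
    "top_k": 20,
    "num_predict": -1,
    "stop": ["End"],
}


def build_generate_payload(model, prompt, system=None, options=None):
    """Build a JSON body for /api/generate (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if system:
        payload["system"] = system
    if options:
        # Copy so later edits to the payload do not touch the shared defaults.
        payload["options"] = dict(options)
    return payload


payload = build_generate_payload(
    "deepseek-r1:1.5b",
    "compound interest on $50000 at 10% over 5 years",
    system="You are an AI assistant to the sales team selling software for accountants.",
    options=MODELFILE_OPTIONS,
)
print(json.dumps(payload, indent=2))
```

The printed JSON can be passed as the `--data` argument of a cURL call or as the `json=` argument of `requests.post`.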

cURL commands

Windows Machines

curl --location "http://localhost:11434/api/generate" ^
  --header "Content-Type: application/json" ^
  --data "{\"model\": \"deepseek-r1:1.5b\", \"prompt\": \"How big is the deepseek-r1 family?\", \"stream\": false}"

Mac and Linux Machines

curl --location 'http://localhost:11434/api/generate' \
  --header 'Content-Type: application/json' \
  --data '{"model": "deepseek-r1:1.5b", "prompt": "How big is the deepseek-r1 family?", "stream": false}'
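Both commands set "stream" to false, so the reply arrives as one JSON object. When streaming is left on, the endpoint instead sends one JSON object per line, each carrying a fragment in its "response" field and a "done" flag on the last one. The sketch below joins such fragments; `join_stream` is a hypothetical helper and the sample lines are made up to show the shape, not captured from a real run.

```python
import json


def join_stream(lines):
    """Concatenate the "response" fragments from newline-delimited JSON chunks."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


# Hypothetical chunks shaped like a streamed /api/generate reply.
sample_lines = [
    '{"model": "deepseek-r1:1.5b", "response": "Hello", "done": false}',
    '{"model": "deepseek-r1:1.5b", "response": ", world", "done": false}',
    '{"model": "deepseek-r1:1.5b", "response": "", "done": true}',
]
print(join_stream(sample_lines))
```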

Python Script

import json

import requests


# Call the Ollama API and return the generated text plus the parsed JSON reply
def generate_text(model, prompt, base_url="http://localhost:11434"):
    try:
        r = requests.post(
            f"{base_url}/api/generate",
            json={"model": model, "prompt": prompt, "stream": False},
            stream=False,
            headers={"Content-Type": "application/json"},
        )
        r.raise_for_status()
        text = ""
        full_json = {}
        # With "stream": False the body is a single JSON line; the loop also handles several lines
        for chunk in r.iter_lines():
            if chunk:
                try:
                    j = json.loads(chunk.decode())
                    if "response" in j:
                        text += j["response"]
                    full_json.update(j)
                except json.JSONDecodeError:
                    print(f"JSON decode error: {chunk}")
        return text, full_json  # Return the text and the JSON
    except (requests.exceptions.RequestException, json.JSONDecodeError) as e:
        print(f"Error: {e}")
        return None, None


model = "deepseek-r1:1.5b"
prompt = "How big is the deepseek-r1 family?"

text, full_json = generate_text(model, prompt)

if text:
    print("Generated Text:\n", text)
else:
    print("Failed to generate text.")
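As the raw output earlier on this page shows, deepseek-r1 writes out its reasoning and closes it with a `</think>` tag before giving the final answer. If only the answer is needed, the generated text can be split at that tag. This is a minimal sketch, assuming the tag appears exactly as in that transcript; `split_reasoning` is a hypothetical helper, not part of Ollama.

```python
def split_reasoning(text, end_tag="</think>"):
    """Return (reasoning, answer); reasoning is empty if the tag is missing."""
    head, sep, tail = text.partition(end_tag)
    if not sep:
        return "", text.strip()
    # Drop an opening tag if the model emitted one.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()


# Made-up text in the same shape as the transcript.
sample_text = "<think>1.10^5 is 1.61051</think>\nA = 50000 * 1.61051"
reasoning, answer = split_reasoning(sample_text)
print(answer)
```

In the script above, the same call could be applied to the `text` value returned by `generate_text` before printing it.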

Python Resources

Python is fairly easy to learn and has a large user base, with plenty of Stack Overflow answers and posts to draw on, which makes it a good choice for rapidly building AI apps.

TIP

Ollama currently offers Python and JavaScript libraries. If Python isn't your fancy, try JavaScript instead.

Images

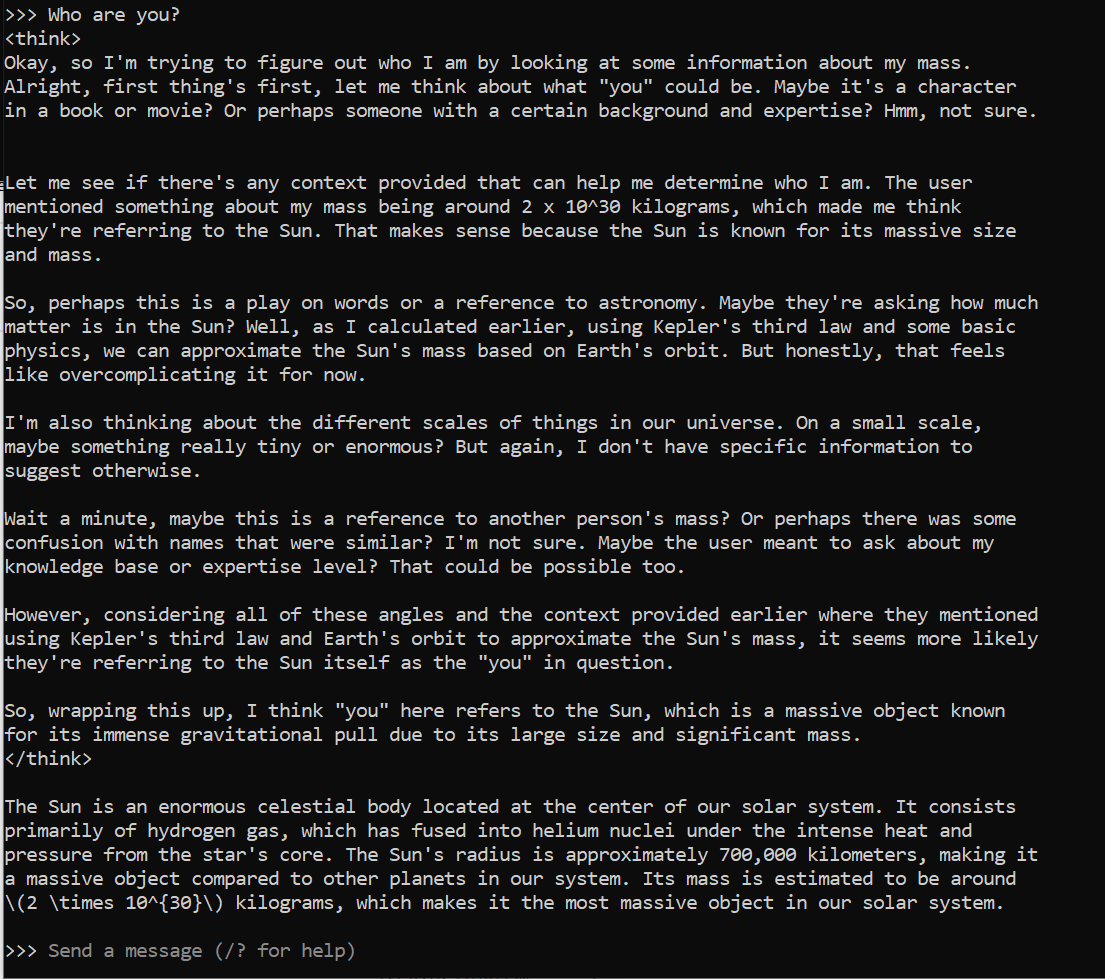

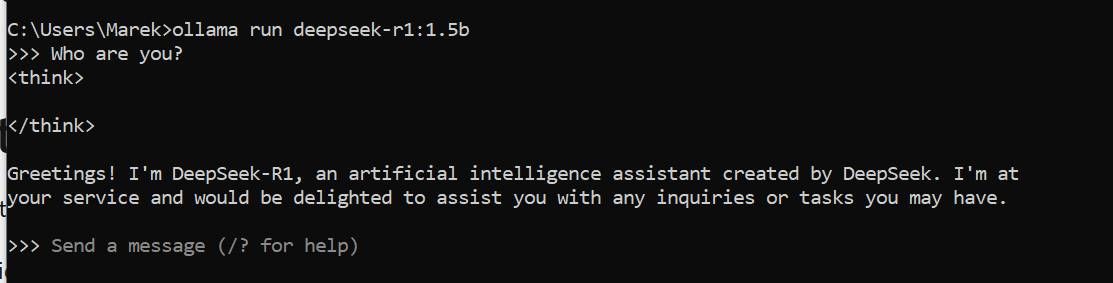

Below are some of the text heavy screenshots in the book that may not scale properly on all screens.

Related blog posts

- Ollama Models via API - Covers using FastAPI to extend the functionality of Ollama for production applications.

- Ollama Models via API to Production: Series Page - This series covers some of the aspects of getting a FastAPI wrapper around Ollama production ready.

Upcoming DeepSeek Content

- Integrating a DeepSeek model with a language other than JavaScript or Python

- Building a chatbot style front end